hiBot: Social Robot (2017)

A Robot Friend

Robots are capable of performing many types of difficult tasks, but does a mindset of technological servitude contribute to a non-social relationship between people and robots? If our only interactions with robots involve us giving them verbal commands, robots will never assimilate into our daily lives because we will neglect to treat them like they are as intelligent or independent as they attempt to be. How then can we deviate from a task-oriented human-robot relationship, such that a robot could help a human just by being a good and attentive listener, not unlike a regular friend?

With that in mind, our team created hiBot, a social robot whose main purpose is to attentively acknowledge you as a friend would. It takes notice when you enter a room and subtly shifts its gaze to follow you if prompted by a greeting or direct eye contact. hiBot is a friend that lives on your desk and keeps you company like a tabletop roommate, doing its own thing unless you give verbal or non-verbal cues that you want to chat. In doing so, it fills the gap left by robots that are very effective at performing their directed tasks but less so at providing self-driven companionship or empathy.

Final Video Documentation

First Steps

At the start, Gareth Chen, Macklin Fluehr, and I each investigated independently, then shared our ideas for what a positive yet unobtrusive robotic presence could look like. We landed on a non-humanoid, listening-focused robot, agreeing that if our aim was to answer the original question of human connection and comfort, we should focus on a simple, pared-down interaction.

Project Team L-R: Jeremy Joachim, Macklin Fluehr, Gareth Chen, hiBot

Technical Design

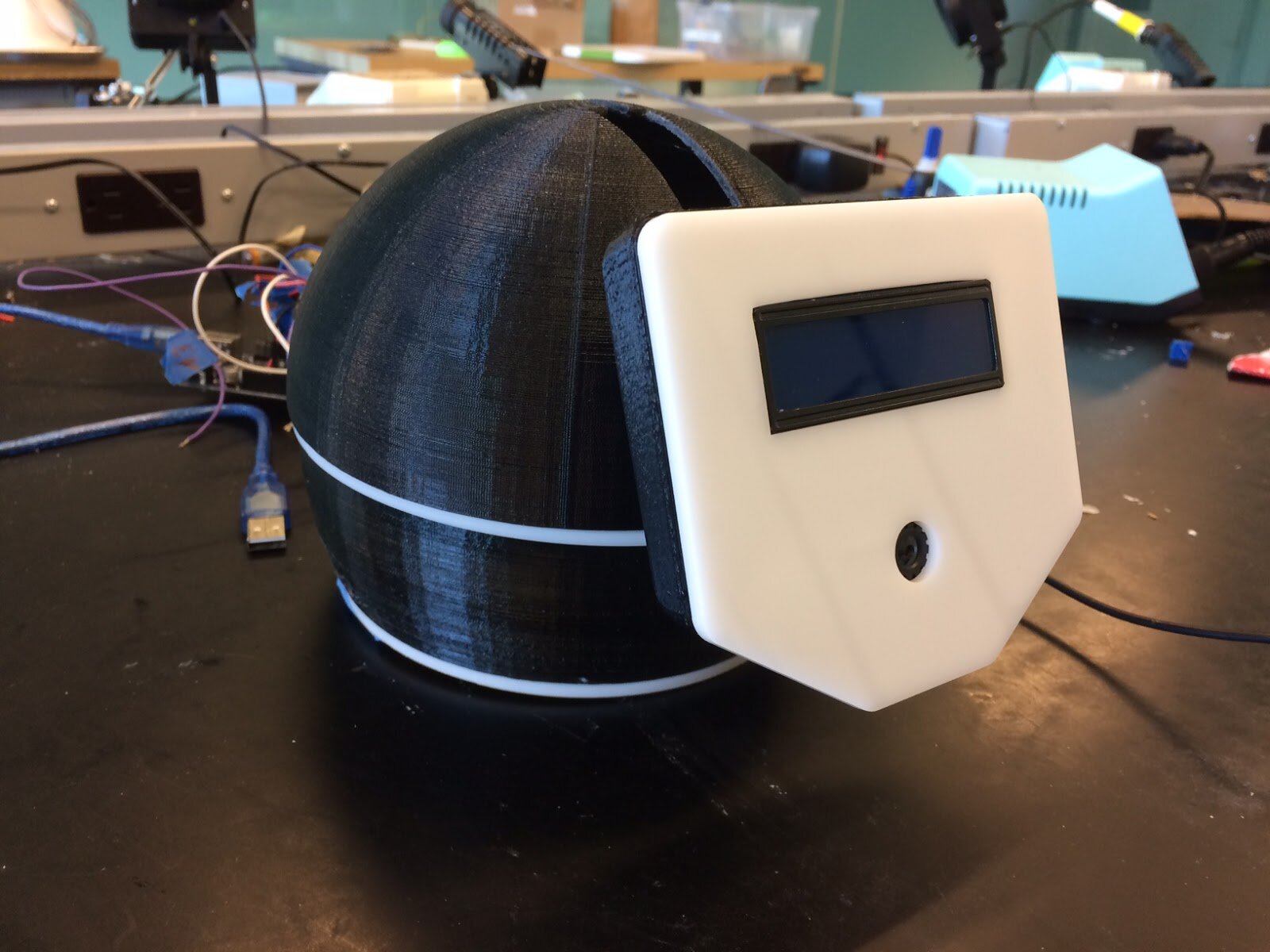

From there, we decided hiBot would be able to see (Logitech C270 webcam), listen (the webcam's onboard microphone), display emotion and reactions (LCD screen), and look around (3 degrees of freedom via servo motors). We intentionally avoided any physical traveling capabilities in an effort to keep the focus on the interactions of idling, noticing, listening, and reacting. Macklin was responsible for the mechanical and load calculations that ensured our servo motors could support the mass and changing inertia. Gareth was responsible for the mechanical CAD, design for fabrication (3D printing, laser cutting, ball bearings), and assembly. I was responsible for the electrical design, soldering, software, control system, and interaction design. For control, I designed a PID controller that used OpenCV to track a trained face. I wrote the software in Arduino (Arduino Mega microcontroller) and Processing (Windows PC), using websockets to communicate between the two.
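To make the face-tracking idea concrete, here is a minimal sketch of one PID update for a single pan servo. It is written in Python for readability rather than in the Arduino and Processing code the project actually used, and everything in it is an assumption for illustration: the names `PID` and `track_step`, the gains, the 0 to 180 degree servo range, and the sign convention (which depends on how the servo is mounted). The face position `face_x` is assumed to come from a face detector such as OpenCV.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook discrete PID controller (gains are illustrative, not hiBot's)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, error: float, dt: float) -> float:
        # Accumulate the integral term and estimate the derivative.
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def track_step(pid: PID, face_x: float, frame_width: float,
               servo_angle: float, dt: float) -> float:
    """Return a new pan angle (degrees) that nudges the camera toward the face."""
    # Normalized horizontal error in [-1, 1]; 0 means the face is centered.
    half = frame_width / 2
    error = (face_x - half) / half
    correction = pid.update(error, dt)
    # Assumed hobby-servo range of 0 to 180 degrees.
    return clamp(servo_angle + correction, 0.0, 180.0)
```

In a loop, `track_step` would be called once per camera frame, with the returned angle sent on to the microcontroller driving the servo. A tilt axis could reuse the same function with the vertical face coordinate and frame height.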

Personality

Without a voice, it was important for us to give hiBot a means of simply and clearly communicating its state. This is where hiBot’s personality was really able to come through. I created these playful screen animations to allow hiBot to express different levels of paying attention to its human friend, from sleeping to looking around to seeing to listening. The idea was to make hiBot always react to a wake word (think “Ok Google” or “Alexa” or “Hey Siri”) but not bind it to remaining inactive before hearing it. In fact, in the spirit of realistic social behavior, we planned to have hiBot stop paying attention and look somewhere else after a while if the human friend did something uncomfortable like maintaining eye contact without talking. Admittedly, getting this right was a larger effort than we had the time or resources for, so we ended up erring on the side of more attentiveness as opposed to less.
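The four attention levels described above can be sketched as a small state machine. This is a hypothetical reconstruction in Python, not the project's actual code: the `Attention` enum, the `next_state` function, its inputs, and the 60-second `sleep_after` threshold are all assumptions made for illustration. It reflects the two behaviors the text describes: the wake word always gets a reaction, and hiBot leans toward staying attentive while a face is in view.

```python
from enum import Enum, auto


class Attention(Enum):
    SLEEPING = auto()
    LOOKING_AROUND = auto()
    SEEING = auto()
    LISTENING = auto()


def next_state(state: Attention, face_visible: bool, wake_word_heard: bool,
               idle_seconds: float, sleep_after: float = 60.0) -> Attention:
    """Pick the next attention level from the current sensor readings."""
    # The wake word always wins, whatever hiBot was doing before.
    if wake_word_heard:
        return Attention.LISTENING
    if face_visible:
        # Err toward attentiveness: keep listening once engaged,
        # otherwise acknowledge the face.
        return state if state is Attention.LISTENING else Attention.SEEING
    # No face in view: idle for a while, then sleep after a long quiet period.
    if idle_seconds >= sleep_after:
        return Attention.SLEEPING
    return Attention.LOOKING_AROUND
```

Each state would map to one of the screen animations. The planned "look away after uncomfortable eye contact" behavior could be added as an extra timeout in the `face_visible` branch.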

Putting it All Together

While the software development was still under way, we sketched, dimensioned, modeled, fabricated, and assembled hiBot.

Final Thoughts

Unfortunately, hiBot took a fall before our final and only video demo was recorded. While we were able to get it working with on-the-spot rewiring and tape (lots of tape), the incident resulted in very sparse final documentation. That said, I am very proud of the questions we set out to answer with hiBot and believe we learned a lot about the power of simple interactions and animations to express emotion and empathy, something I still believe will be crucial to the robots of tomorrow.

This project could not have been completed without my teammates Gareth and Mac.